Unlock Seamless Data Migration: Move PostgreSQL to YugabyteDB in Just Minutes with Apache Airflow!

Posted by Ava Parker

Oct. 24

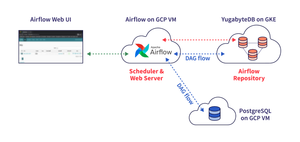

Welcome to the second part of our series on connecting Apache Airflow with YugabyteDB. In the previous article, we walked through setting up Airflow to use YugabyteDB as its backend. In this one, we demonstrate how to create an Airflow workflow that migrates data from PostgreSQL to YugabyteDB.

What is YugabyteDB? It is an open-source, high-performance distributed SQL database designed for scalability and reliability, drawing inspiration from Google Spanner. Yugabyte’s SQL API (YSQL) is compatible with PostgreSQL.

Demo Overview

In this article, we will develop a straightforward Airflow DAG (Directed Acyclic Graph) that identifies new entries added to PostgreSQL and transfers them to YugabyteDB. In a future post, we will explore DAGs in more detail and design more intricate workflows for YugabyteDB.
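To make the idea of "identify new entries and transfer them" concrete, here is a minimal sketch of one common way to do it: a high-water-mark copy. It is not the task file from this series. The table name `orders`, the key column `id`, and the `placeholder` argument are hypothetical, and the sketch assumes the table has an integer key that only grows. It accepts any two DB-API 2.0 connections, so the same function could be called from an Airflow Python task with connections to PostgreSQL and YugabyteDB.

```python
def copy_new_rows(src_conn, dst_conn, table="orders", key="id", placeholder="%s"):
    """Copy rows whose key exceeds the destination's current maximum.

    Both connections are DB-API 2.0 connections. `table`, `key`, and
    `placeholder` are illustrative assumptions, not names from the article.
    Returns the number of rows copied.
    """
    dst_cur = dst_conn.cursor()
    # High-water mark: the largest key already present in the destination
    # (0 when the destination table is still empty).
    dst_cur.execute(f"SELECT COALESCE(MAX({key}), 0) FROM {table}")
    high_water = dst_cur.fetchone()[0]

    # Fetch only the source rows that are newer than the high-water mark.
    src_cur = src_conn.cursor()
    src_cur.execute(
        f"SELECT * FROM {table} WHERE {key} > {placeholder} ORDER BY {key}",
        (high_water,),
    )
    rows = src_cur.fetchall()
    if not rows:
        return 0

    # Build one parameter placeholder per column, then insert in a batch.
    marks = ", ".join([placeholder] * len(rows[0]))
    dst_cur.executemany(f"INSERT INTO {table} VALUES ({marks})", rows)
    dst_conn.commit()
    return len(rows)
```

The default placeholder `%s` matches PostgreSQL drivers such as psycopg2, which also work against YSQL. Because the function reads the destination's maximum key on every call, running it twice in a row copies nothing the second time, which suits a DAG that runs on a schedule.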

We will cover the following steps:

1. Install PostgreSQL

2. Set up GCP firewall rules

3. Configure Airflow database connections

4. Create an Airflow task file

5. Execute the task

6. Monitor and confirm the results
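For step 3, Airflow can read a connection from an environment variable named `AIRFLOW_CONN_<CONN_ID>` whose value is a connection URI, as an alternative to entering it in the web UI. The helper below is a small sketch of how such a variable could be built. The function name, connection IDs, hosts, and credentials are hypothetical; the ports shown in the usage note are the usual defaults (5432 for PostgreSQL, 5433 for YSQL) and may differ in your setup.

```python
from urllib.parse import quote


def airflow_conn_env(conn_id, user, password, host, port, database):
    """Return (variable name, URI) for an Airflow connection set via env var.

    Hypothetical helper for illustration. User, password, and database are
    percent-encoded so that characters such as '@' or '/' cannot break the URI.
    """
    name = "AIRFLOW_CONN_" + conn_id.upper()
    uri = "postgres://{}:{}@{}:{}/{}".format(
        quote(user, safe=""),
        quote(password, safe=""),
        host,
        port,
        quote(database, safe=""),
    )
    return name, uri
```

For example, `airflow_conn_env("postgres_source", "postgres", "secret", "10.0.0.1", 5432, "postgres")` and `airflow_conn_env("yugabyte_dest", "yugabyte", "secret", "10.0.0.2", 5433, "yugabyte")` would yield one variable for each side of the migration. Both use the `postgres` scheme because YSQL speaks the PostgreSQL wire protocol.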

Prerequisites

Here’s the environment we will use for this tutorial:

- YugabyteDB – version 2.1.6

- Apache Airflow – version 1.10.10

- PostgreSQL – version 10.12

- A Google Cloud Platform account

Note: This demonstration aims to show how to set everything up with minimal complexity. For a production environment, you should implement additional security measures throughout the system. For more details on migrating PostgreSQL data to distributed SQL quickly, visit https://t8tech.com/it/data/migrate-postgresql-data-to-distributed-sql-in-minutes-with-apache-airflow/.
